Adventures in Finetuning Stable Diffusion

Some research on image generation, featuring Pokémon and (once again) pre-Columbian codices 👨🎨

Today I’m republishing something from a few weeks ago, first posted here. With a friend from an image generation startup, we finetuned Stable Diffusion models, including the one I used to create the images in last week’s post on the Codex Borgia.

We asked some cool research questions — but I fear that the extremely fast pace of image generation will soon make them obsolete. Already, Stable Diffusion 2.0 has come out and changed several things, including the art of prompting. In a few years, the work below will surely feel adorably outdated. Still, I think this post contains some neat insights around the concept of finetuning, and it is interesting from an archival point of view, which is why I’m putting it on my main blog.

Stable Diffusion took about 150,000 GPU-hours to train from a dataset of billions of images, at a cost of about $600,000. Not necessarily a giant expense for a large company, but definitely out of reach for ordinary people who might want to create their own image generation models.

But ordinary people don’t have to recreate Stable Diffusion from scratch. In machine learning, the process of finetuning is to take an existing model and train it just a little bit, with a moderate amount of new data, so that it becomes better at a specific task.

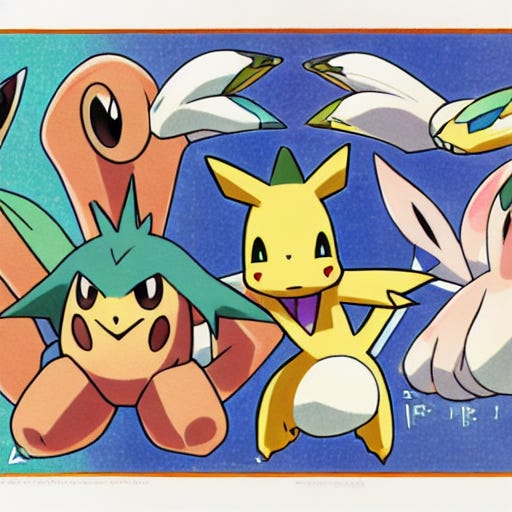

Say you’re trying to generate art for a game. We’ll pretend that it’s a Pokémon game (since Pokémon have been a popular proof of concept among finetuning enthusiasts) and that the art style should be similar to official Pokémon artwork. You load up Stable Diffusion, write the simple prompt “a pokemon”, and get:

This is not… completely and utterly terrible, you could use it as inspiration, maybe. But it’s far from immediately usable, not least because of the weird anatomy or the very recognizable Pokémon logo. One thing you could do is craft a detailed prompt to get something closer to what you have in mind. Let’s try to add the original Pokémon artist with “official artwork of a pokemon by Ken Sugimori” (with the same random seed, so the image should resemble the above somewhat):

Not really any better. We could keep tweaking the prompt to get what we want, and in fact we did (most of our other attempts at prompt engineering were even worse than this). But it can be an annoying and time-consuming process. Besides, it’s difficult to get prompts that generate various images in a consistent style. Yet that’s what you want for your game, which should ideally generate new Pokémon images on the fly.

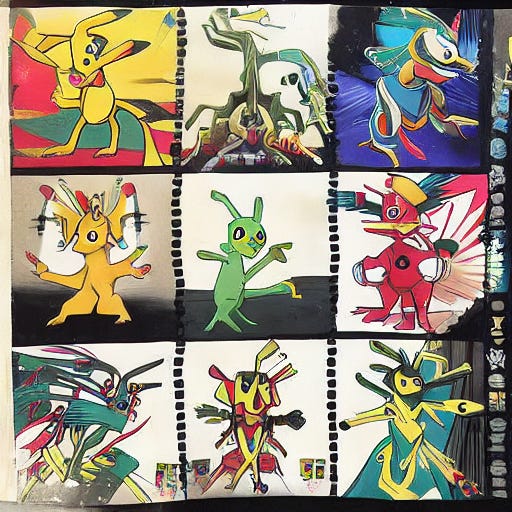

So the other thing you can do is finetuning. Take an instance of the Stable Diffusion model, give it new data in the form of Pokémon official art for all existing Pokémon (that’s several hundred pieces of art and their captions), and then teach the model the style that you want. Now, when you write a simple prompt like “a pokemon”, with the same random seed, you will get something like:

Much better! It’s not 100% perfect, but it looks like a (new) Pokémon in the style of official artwork. It could be used in a game with some tweaks, or even directly if you don’t mind the small flaws. And all it took was a preexisting model, code for the finetuning procedure, a little bit of data, and some GPU computation time.

Let’s look at these requirements one by one.

The model is freely available, or at least one of the existing models is. As of writing this, Stable Diffusion v.1.4 can be downloaded from here.

Code can be tricky to write and debug depending on your knowledge of machine learning engineering, but fortunately you can reuse the scripts from a couple of projects that have released their Python notebooks. (More on that below.)

Data, of course, can be anywhere from trivial to a massive challenge. A key thing to keep in mind is that you need both images and their associated captions to train the model to associate words and pictures. We’ll discuss later in the post the various possibilities to get captions.

Lastly, you’ll need to make a bunch of GPUs perform the necessary computations. This involves some costs, since you’re probably going to rent a machine on a cloud like Vast.ai or Lambda Labs. As we wrote at the beginning of the post, it took about $600,000 to train a model from scratch. Finetuning costs less than that, but how much less? It depends on what data you’re using and what results you’re aiming for, of course, but the answer is generally below $100, sometimes below $10.

This is a pretty incredible discount: 4 or 5 orders of magnitude! And it’s one of the reasons why we think finetuning is really exciting. Many people are already finetuning Stable Diffusion to fit their purposes, and we can only expect that creative uses of this process will become more widespread as time passes.

The rest of this post has two main sections. The first is an overview of existing projects, including a couple of experiments that we carried out ourselves. The second presents some interesting results we found regarding data, captions, training length, and prompting.

One note before we go on: at the moment, the most popular way to finetune a model is probably the somewhat elaborate technique called DreamBooth. For this article we chose to focus on finetuning with the more basic method described above. We’ll say more about why later in the post.

An overview of the field

Waifu Diffusion

To date, the most comprehensive efforts at finetuning Stable Diffusion have been done by the Waifu Diffusion team, who created a model that is able to make female anime-style portraits (a waifu, in anime jargon, is an idealized and attractive female character). The latest iteration of Waifu Diffusion, version 1.3, was released in October 2022.

Here’s a pair of images comparing a single prompt (and random seed) on regular Stable Diffusion and Waifu Diffusion:

Data:

680,000 images with captions

The images come from Danbooru (warning: probably NSFW), an imageboard site on which people upload anime pictures and tag them.

The captions are constructed from the tags. Danbooru images are tagged with a lot of information (see list of tags here), allowing very precise captions and prompts.

Training:

Length: 10 epochs (i.e. full cycles of training), which took about 10 days

Cost: $3,100

Links:

generate Waifu Diffusion images on SparklPaint ✨

For comparison, the previous version, Waifu Diffusion 1.2, had 56,000 images, was trained for 5 epochs, and cost ~$50-100. Work is ongoing to train a 1.4 version from millions of images while also incorporating new finetuning techniques.

Justin Pinkney’s Text to Pokémon Generator

While we were working on our own Pokémon finetuned model, Justin Pinkney from Lambda Labs released another Pokémon generator that became quite popular. Here’s “a pokemon”:

Justin provides us with this really cool gif of the same generations at various stages of training the model:

As we can see, the more the model is trained with the Pokémon dataset, the more the images end up looking like the official art, even when the prompts are unrelated to Pokémon.

Data:

833 images with captions

The images are official Pokémon artwork as collected by FastGAN-pytorch.

The captions were automatically generated with BLIP. BLIP is a model that is able to describe an image with text.

Full dataset available here

Training:

Length: 6 hours (15,000 steps, which is apparently 142 epochs)

Cost: $10

Links:

Replicate (to easily generate images yourself)

Pokédiffusion: Our own Pokémon experiment

Our approach to finetuning Stable Diffusion on Pokémon art differs a bit from Justin Pinkney’s, allowing some interesting comparisons.

We scraped the images directly from Bulbapedia and gave them simple templated captions based on metadata instead of using a tool like BLIP or writing them manually. We also excluded a few images to be able to examine the behavior for real Pokémon that the model hadn’t seen. We then used the Waifu Diffusion code to train for various numbers of epochs in order to see the effects of training length.

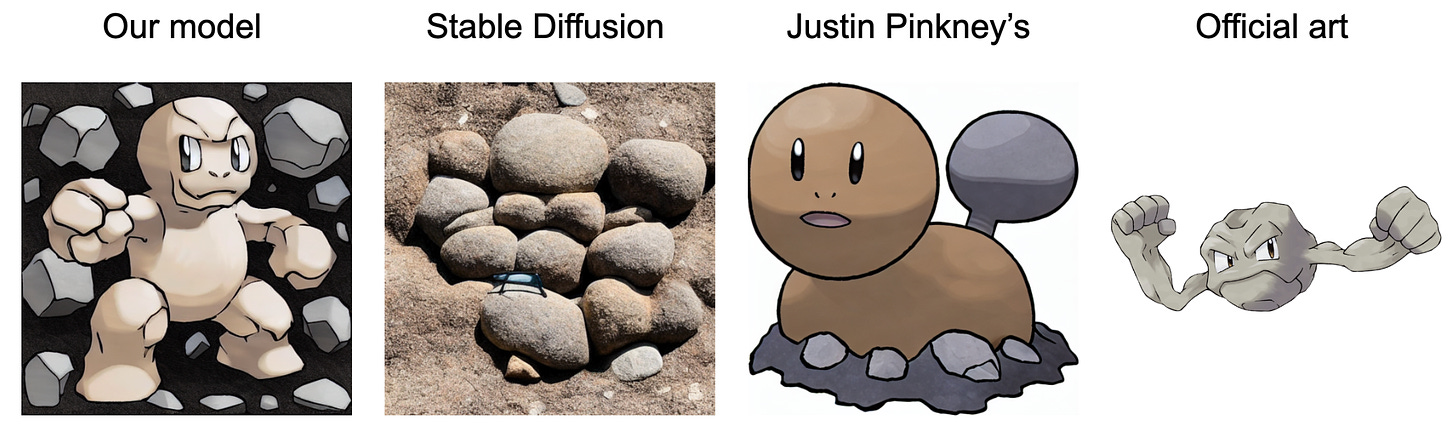

The third image in the introduction is an output of our model. Here are some other comparisons between our model, regular Stable Diffusion, Justin Pinkney’s model, and, where it exists, the real Pokémon art.

“official art of a pokemon Abomasnow, Ice, Grass” (Abomasnow is a real Pokémon that was included in the dataset, see rightmost image):

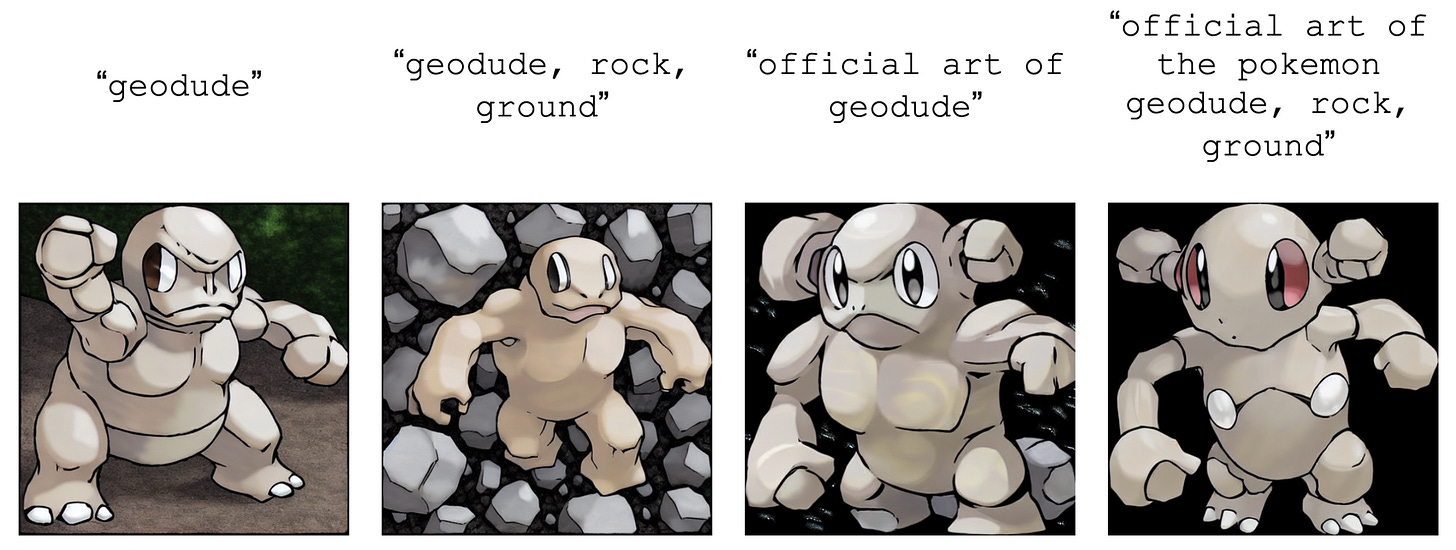

“geodude, rock, ground” (Geodude is a real Pokémon that was not included in the dataset, see rightmost image):

“official art of the pokemon Deepeel, water electric type” (Deepeel is a fictional Pokémon we made up):

“a fire type pokemon, highly detailed, 4k, hyper realistic, dramatic lighting, unreal engine, octane render”:

The parameters of our training were:

Data:

833 images with captions (160 kept for validation)

The images are official Pokémon artwork scraped from Bulbapedia.

The captions follow the template “

official art of pokemon ${pokemon}, ${type1}, ${type2}”, for example “official art of pokemon Bulbasaur, grass, poison”. The types (grass, poison, water, fire, electric, etc.) are an important gameplay concept in Pokémon, and they allow us to automatically put some interesting information in the captions even if we didn’t use a sophisticated generation method.

Training:

Length: we trained the model for 100 epochs with checkpoints at 1, 2, 5, 15, 30, 45, 60, 70, and 100 epochs.

Each epoch took 9 minutes and cost approximately $0.10 on an RTX A6000 machine

Our most trained model therefore took 15 hours and cost approximately $10, although we had good results with the 45-epoch checkpoint, which took less than half that time and cost.

We used a slightly adapted version of the code used in the Waifu Diffusion project.

Links:

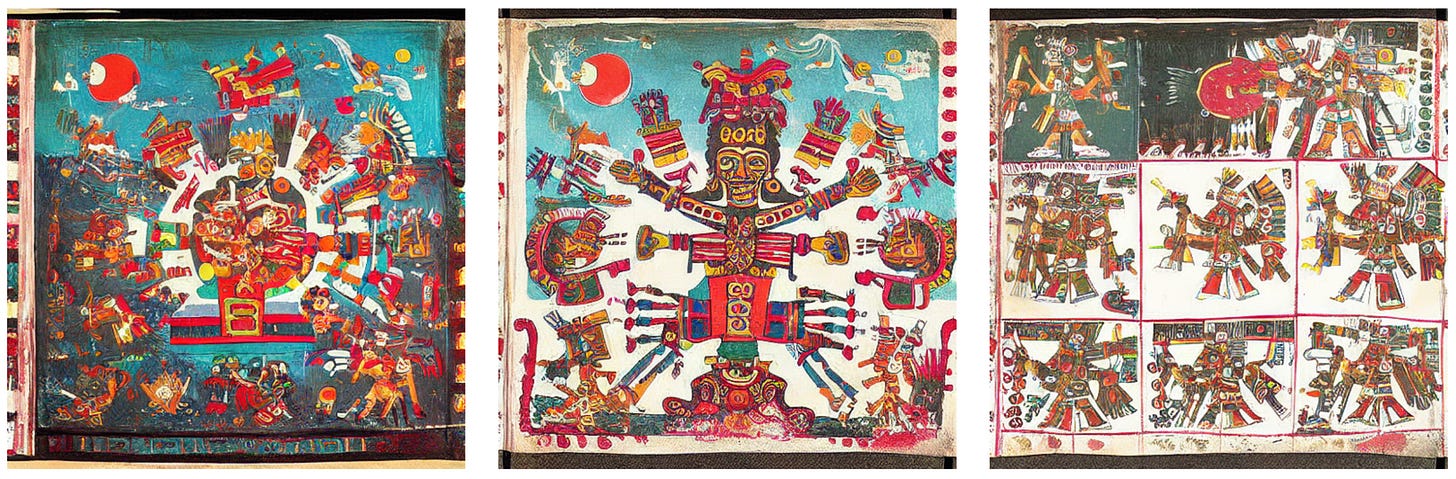

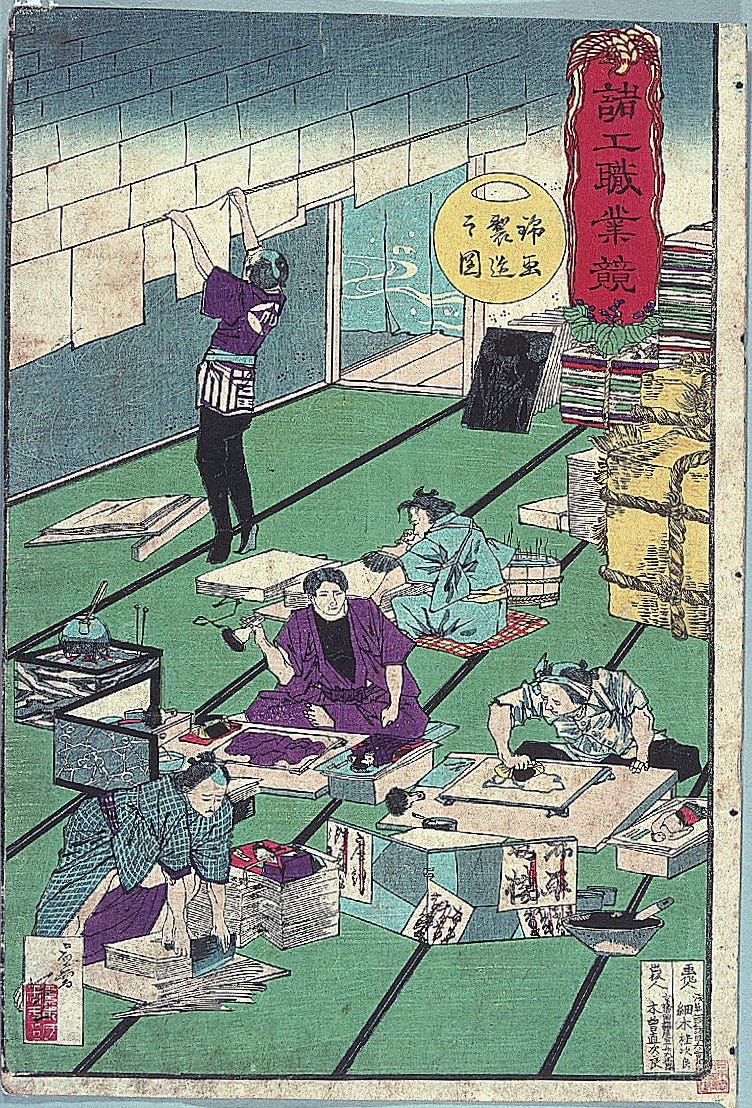

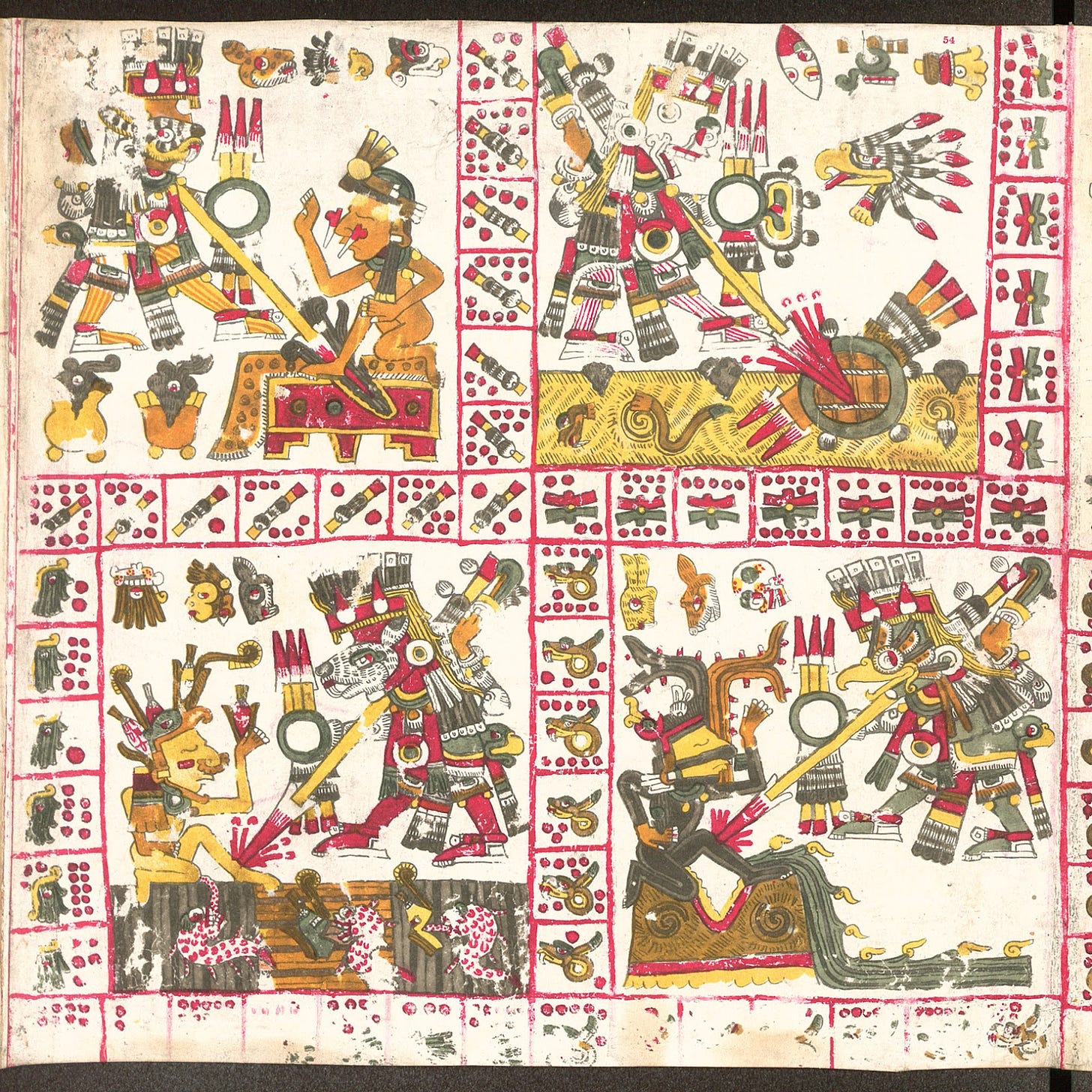

Tonalamatl Diffusion

Leaving Pokémon aside, we also experimented with data that is very different from what has been so far: art from pre-Columbian Mexican civilization.

The Codex Borgia is one of a few manuscripts that survived the 16th-century Spanish conquest. It is a tonalamatl, that is, an almanac used for divinatory purposes, and it contains 76 animal-skin pages covered with drawings related to Mesoamerican mythology and religion (it’s unclear which of the Mesoamerican peoples made it, though; it wasn’t the Aztecs). It is now kept in the Vatican Library after having been in possession of cardinal Stefano Borgia.

We wondered if Stable Diffusion could be finetuned to generate other pages in that style, especially considering that the dataset is small, at only 76 images. (A more ambitious project would incorporate pages from the wider Borgia Group of manuscripts, as well as other Mesoamerican codices.) By default, Stable Diffusion doesn’t seem to know about the Codex Borgia, and interprets it as some old European book:

After finetuning, we get instead things like:

That’s a big improvement, even with so little data! And the small dataset allowed us to examine the effect of various kinds of captions — more on that in the next section.

Data:

76 images with captions

The images were scraped from Wikimedia

We created different types of captions to train as many models:

Templated with page number: “page 56 of the codex borgia”

Human-annotated: we handmade captions based by copying and adapting the descriptions of the pages of the codex on Wikipedia. An example is “page 56 of the mesoamerican codex borgia, showing Mictlantecuhtli and Quetzalcoatl back to back”

Empty: we examined the impact of having empty strings as captions for all pages.

We created other captions using BLIP and a different default, but did not use them. (You can see them here.)

Training:

Length: we trained a model for each caption type, 65 epochs each, with various checkpoints.

We didn’t keep a good record of the time and cost per epoch, but it was on the order of minutes and cents on an RTX A6000 machine.

We used a slightly adapted version of the code used in the Waifu Diffusion project.

Links:

Generate images yourself on Replicate

Noteworthy projects that we’re not covering

NovelAI Diffusion Anime

The company NovelAI, which sells an AI-assisted storytelling service, has begun incorporating a finetuned Stable Diffusion model to their product. Their NovelAI Diffusion Anime model allows you to quickly generate anime-style pictures to a story. One reason to pay attention is that they’re bringing new improvements to finetuning, including changing the architecture of the model.

However, these improvements are rather advanced, and their model is proprietary, which means it is outside the scope of this post. You can read more in this introductory article or this more technical one.

Pony Diffusion

Pony Diffusion is an example of a model that finetunes Waifu Diffusion further. It was trained on an early checkpoint of Waifu Diffusion with 80,000 additional images from a My Little Pony image board.

DreamBooth and Textual Inversion

Recently, someone made a basic web app that allows you to choose from several finetuned Stable Diffusion models, including Waifu Diffusion, Text-to-Pokémon, and Pony Diffusion. But most of the models were finetuned with DreamBooth, perhaps the most popular method to customize Stable Diffusion right now. With just a few images representing an object (such as a character), DreamBooth is able to insert that object in any generated image.

Another method called Textual Inversion has been developed to teach a model new words by associating them with images. Unlike DreamBooth and “regular” finetuning, it doesn’t change the model per se.

Both DreamBooth and Textual Inversion can be seen as ways to make a model good at depicting a single concept. Accordingly, they often require special prompting. By contrast, “regular” finetuning changes the entire model and can be fairly general. Still, DreamBooth has proven surprisingly popular and versatile. We might write more about it in the future.

Some cool findings

Since finetuning text-to-image models is so new, there hasn’t been much research yet, formal or informal. Below we describe some of the interesting results we got from experimenting with the models described above.

Dataset size

The most immediate question for anyone who would finetune Stable Diffusion is, what data should be used, and how much of it?

We didn’t directly study dataset size in our experiments: we would have had to train several models on different fractions of the same dataset. But the projects above show a range of dataset sizes: from the 76 images of the Codex Borgia to the 680,000 ones from Danbooru in Waifu Diffusion (soon to be several million).

We can try comparing Waifu Diffusion 1.2 (left, 56,000 images) with 1.3 (right, 680,000 images):

Even on a prompt like this that doesn’t use many Danbooru tags, the newer version of the model generates an image that is both more anime-like and of better quality. Note however that comparison is imperfect, because there were many changes to version 1.3 other than dataset size.

Our results at least make clear that you don’t need a huge dataset. The Tonalamatl Diffusion model used 76 images and that was quite enough. DreamBooth is able to work well with only 10 images.

Fewer images necessarily means fewer elements that the model can learn, but if the elements you want are contained in your dataset, then there’s no particular reason to want more. In our case, there simply does not exist any page of the Codex Borgia beyond those 76. So if you really needed something that could pass specifically as a Codex Borgia page (considering that other Mesoamerican codices have different styles), then that dataset is optimal.

The one other variable that needs to vary according to dataset size is training length. The less data, the more training cycles (“epochs”) you’ll need to get a result of sufficiently high quality. Of course, less data means shorter cycles.

Captions

Once you have a dataset, the next step is to caption each image in it. Ideally, of course, you’re using images that already come with captions, such as the Danbooru tags for Waifu Diffusion. But if you have to label the images yourself, there are several possible approaches to captioning:

Empty caption for all images

Fixed caption for all images (e.g. “

official art of a pokemon”)Templated captions that are mostly the same but with some filled details based on available metadata (e.g. “

official art of pokemon ${pokemon}, ${type1}, ${type2}”)Automatically generated captions from an AI tool such as BLIP

Human-annotated captions, obviously feasible only for small datasets or at great cost.

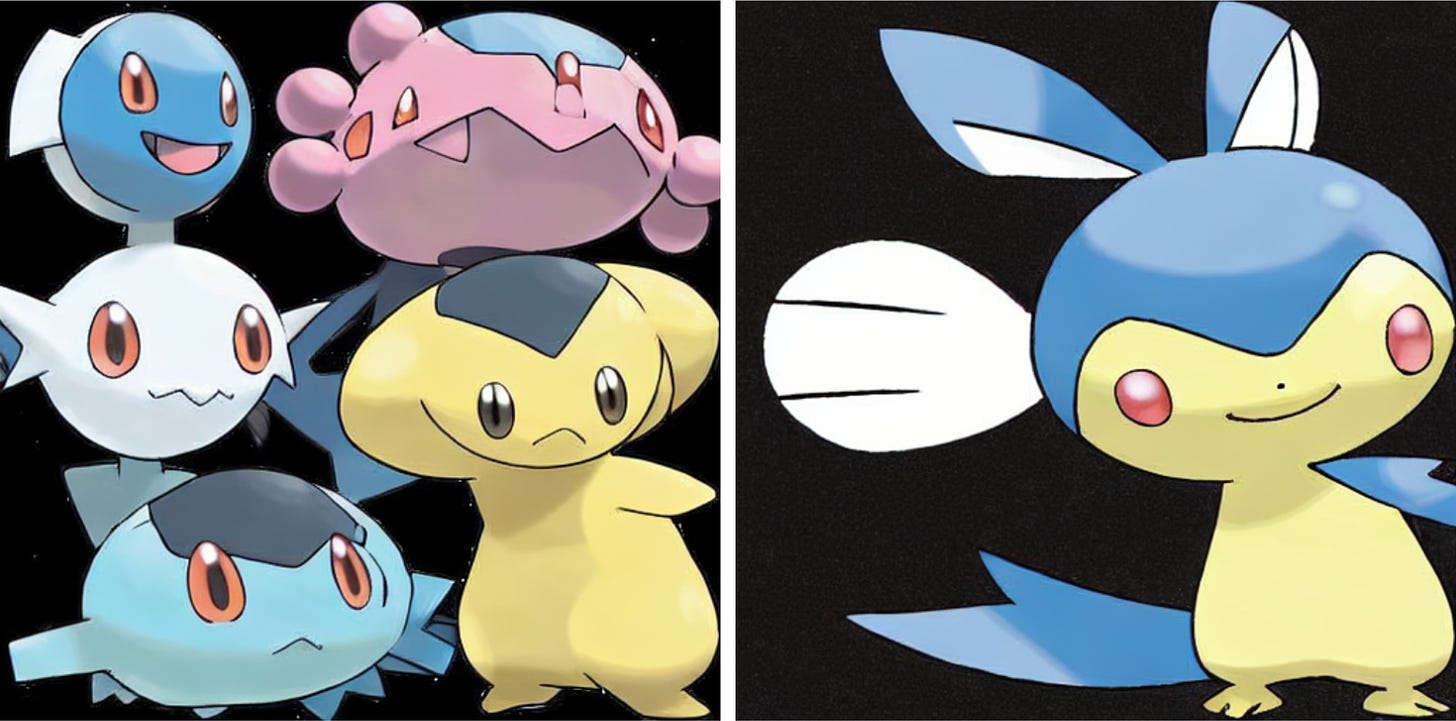

Our Pokédiffusion model used templated captions, while Justin Pinkney’s model used BLIP-generated ones. Does this make a difference? Clearly the output of the two models is not the same, as some of the examples above show, but is there a difference in quality?

It’s not obvious how we would test for that. Both models are able to produce plausible Pokémon. One way we can at least get an idea of the impact of captions is to use the captions directly as prompts to generate images. For instance, consider the mythical psychic Pokémon Mew, whose official artwork is:

In our dataset, this image is captioned “official art of mew, psychic”. In Justin Pinkney’s dataset, it is captioned “a drawing of a pink cat flying through the air”. If we ask both models to generate images using these two captions as prompts, we get:

Our 100-epoch model

Justin Pinkney’s model

official art of mew, psychic

a drawing of a pink cat flying through the air

We see that our model is able to recreate Mew to a reasonable extent when given the caption as prompt. Justin Pinkney’s model doesn’t do as well on that prompt, although it seems to understand that it’s supposed to generate a cat-like creature (the pattern holds when you generate more images with the same prompt).

Meanwhile, the BLIP-generated caption is too generic for our model to do well with it — it creates something too close to a real cat. Justin Pinkney’s model does okay: the feet are close to the original. However, we could also say that it’s good if the model doesn’t exactly recreate existing Pokémon, since that isn’t the point of using image generation in the first place.

These results are of course limited by the important differences between the two models, including training length. They just show that the captions can have an importance when using prompts that are in the same linguistic space.

To get a more direct comparison between different approaches to captioning, we trained our Codex Borgia model three times on the same image dataset, but captioned three different ways: empty, templated, and human-annotated. (We intended to do BLIP as well, but found the resulting captions to be of too low quality — for instance “an old book with a bunch of pictures on it”.)

The empty captions are the empty string “”. The templated captions are almost all identical, different only in page number: “page ${page_number} of the codex borgia”. The human-annotated captions vary but tend to look like “page 54 of the mesoamerican codex borgia, showing Tlahuizcalpantecuhtli, representing the morning star Venus, piercing various characters”.

Here are some results from the three models, trained for 65 epochs. For each prompt, the three images are from the same random seed.

“A new page from the Codex Borgia”:

“Tlahuizcalpantecuhtli, representing the morning star Venus, piercing various characters” (i.e. a prompt very similar to the human-made caption for page 54):

“a list of day signs of the Tonalpohualli, the Central Mexican divinatory calendar, on a damaged page” (i.e. another prompt taken almost verbatim from the human-made captions, in this case from page 1, including the part about damage):

“an aztec ritual to corn and rain”:

“a portrait of barack obama, from the pre-columbian codex borgia, 16th century”:

What to conclude from this sample? We think there is usually an increase in quality and “Codex Borgia-ness” as the captions get more detailed, but in most cases it’s not that noticeable. In the Aztec ritual example, it seems that the manual captions helped the model generate something that looks like a page from the Codex, as opposed to some kind of photograph with the other models. In the Obama example, the manual captions helped refine the style compared to the templated caption model, but the output is otherwise similar.

It seems that the main jump in quality occurs between the no-caption model and the templated caption model, instead of between the templated and the human-annotated. This is a surprising result! There is a much larger difference in captioning effort between the latter two. Almost no effort goes into giving a basic caption like “page 54 of the codex borgia”, and yet this model does significantly better than one where all captions are the empty string.

On the other hand, the no-caption model gave somewhat better results when using an extremely generic prompt, such as “an image”:

The model with the more elaborate captions seems to be less good at capturing the essence of the dataset even for unrelated prompts, because that essence is closely associated with the specific words used in the captions. Whereas the no-caption model has created no such associations, and instead infused the essence of the dataset to the entire model — which is most visible when using a generic prompt that doesn’t interfere with it.

Since the no-caption model often gives decent results, we could say that captioning at all is, in fact, optional. But our main conclusion would be that coming up with simple (templated or possibly fixed) captions, which requires very little effort, is preferable, because it creates word-image associations that you can then use in prompts. Beyond that, using human labor is probably rarely worth it — except perhaps when the dataset is small and teaching the model some concepts such as “Tlahuizcalpantecuhtli” would be useful.

Training length (epochs)

The next crucial question for finetuning is, given a dataset, how much training is needed to attain results of sufficient quality?

Training length is usually measured in epochs. An epoch is done when a machine learning model has gone over all the data and updated its weights according to the learning rate parameter. Multiple epochs mean multiple passes of the entire dataset. During finetuning, we can take a “checkpoint” of the model at any epoch and compare various training lengths together. The gif from Justin Pinkney above shows exactly this.

Let’s take one of our Pokémon generations, again the fictional water/electric-type Deepeel. In pure Stable Diffusion (let’s call it epoch 0), with a new random seed, the prompt “official art of the pokemon Deepeel, water electric type” gives:

Quite abstract. Now we finetune Pokédiffusion for exactly 1 epoch and then generate an image with the same prompt and random seed. The result is:

Okay. It does have the more cartoony feel of official Pokémon art, but it’s not impressive. Clearly we need to train the model more. Let’s try another epoch, for a total of 2:

Nice, our Deepeel now has a recognizable face! And something that looks like an arm! That feels like a substantial improvement from the formless messes of epochs 0 and 1.

Let’s speed up a little until 45 epochs:

It’s difficult to say for sure, but the design seems to be improving at each epoch interval. The rightmost one looks like a weird little alien, but it has a well-defined and interesting shape as well as a reasonable color palette. You could imagine it in a Pokémon game.

One thing to note is that the model started out with a consistent blue-and-pink palette, then after 30 epochs it “experimented” with yellow and blue, and then went with white and blue. In the next iterations, the model seems to have settled on pale blue as the dominant color:

We stopped at 100 epochs, because we had to stop somewhere. You could train these things indefinitely, but at some point there isn’t much improvement in quality. (In fact, if you train for too long, you could overfit and then just produce images very similar to the ones in the dataset no matter what the prompt is.) And we think that in this case, 100 was a good choice — that last Deepeel is cute, has an interesting design, and looks water/electric-type without being too obvious about it. There are a few flaws, like the middle of its body, but it’s not too bad. None of the versions with fewer epochs were even remotely as good looking.

But one of our cool findings is that the subjectively best version of a Pokémon was not always the one from the most trained model. For instance, the “a pokemon” example shown in the introduction was from the 70-epoch model… and turns into this at 100 epochs:

Here’s a case with the prompt “official art of a flying-type pokemon” where the best output seems to be from the 60-epoch model.

Sometimes the best artwork comes from the 45-epoch model. Here’s “official art of a rock ground type pokemon”, where the intriguing, colorful rocky turtle becomes, in our opinion, progressively worse with more epochs:

On the other hand, pre-45 epoch images are almost always worse. Here is the 30-epoch version for the last three examples:

We kind of like the flying-type one — it looks like an electric bat — but we would still say it’s lower-quality than the bird at 60 epochs. Meanwhile that yellow/blue/red/white thing is a mess.

It seems plausible that the following is true: initially, there is consistent improvement in image quality as we add more epochs, but once a certain point is reached (about 45 epochs for the Pokédiffusion model) then more training doesn’t necessarily improve the output. It may improve the output for an individual combination of prompt and seed, and perhaps we would find a pattern if we studied a large sample. But since additional training costs time and money, it’s better to stop somewhere around the number of epochs where the quality plateaus, if you can. Of course, the exact number will depend on your data.

The rock turtle example also shows an interesting phenomenon: it seems to “alternate” between two broad designs. The 60- and 100-epoch versions look like similar gray rocks with beige legs, while the 45- and 70-epoch generations are different and share a color palette. It’s as if a particular random seed were able to generate a few specific designs, and the additional training adds some randomness that can push the model in one direction or the other.

A particularly striking example of design shift is this sequence of Deepeel generations with yet another random seed. From epochs 0 to 45, we see steady improvement towards what we imagine a water/electric fish pokémon to look like…

And then, at epoch 60, surprise!

Although we can sort of see a common color pattern, this is wildly different, all of a sudden. It’s also interesting in that it contains nine small creatures rather than one big one.

Further training (70 epochs) gives a better result with the same layout, and then at epoch 100 we suddenly get a single Pokémon again, in a totally different design from before, but with comparable quality.

All in all, this doesn’t necessarily spell great news for applications of finetuning. Ideally we’d want a training length where the results are consistent. Maybe that length was, say, 150 epochs for our model, and we just never got there. Justin Pinkney’s model, reportedly trained for 142 epochs with a slightly larger dataset than ours, seems somewhat more consistent, including in that gif comparing various checkpoints.

On the other hand, it may not be a big deal if we don’t purposefully compare various epochs. Had we trained for 100 epochs without saving checkpoints, we would never have been aware of any designs but the last. So perhaps the lesson is to remember that a “bad” output is not necessarily a sign that the model is flawed: it can simply be the result of the model “trying out” something different for a specific combination of training length, seed, and prompt.

Prompting

Speaking of prompts, we conclude with some observations on how to ask a finetuned model what to generate. What is the best way to write a prompt?

There certainly is some degree of flexibility. We tried comparing different wordings around the word Geodude, the name of a Pokémon that, as you may recall, was not included in our training set (this is the 100-epoch model):

None of these images — all using the same random seed — is obviously superior to the rest. A very simple prompt like “geodude” works fine (and shows that Stable Diffusion, by default, knows what Geodude is to some extent).

If we remove geodude from the prompt, and just use the types, we are back to the rocky turtle from earlier. And if we just ask for generic Pokémon stuff, we get, as expected, various generic results.

What if we remove all words that can be connected to our training set, and just ask for “an image”?

We get something that is as unspecific as it gets. As discussed in the section on captions, you want to specify at least some words that your finetuned model has learned to associate with images from the dataset. (Well, unless you used an uncaptioned dataset.)

Note, however, that Justin Pinkney’s model does generate the correct artwork style for “an image”, although the results are still quite abstract (see here for a theory of why they look like this):

This is probably due to his model being trained for longer than ours.

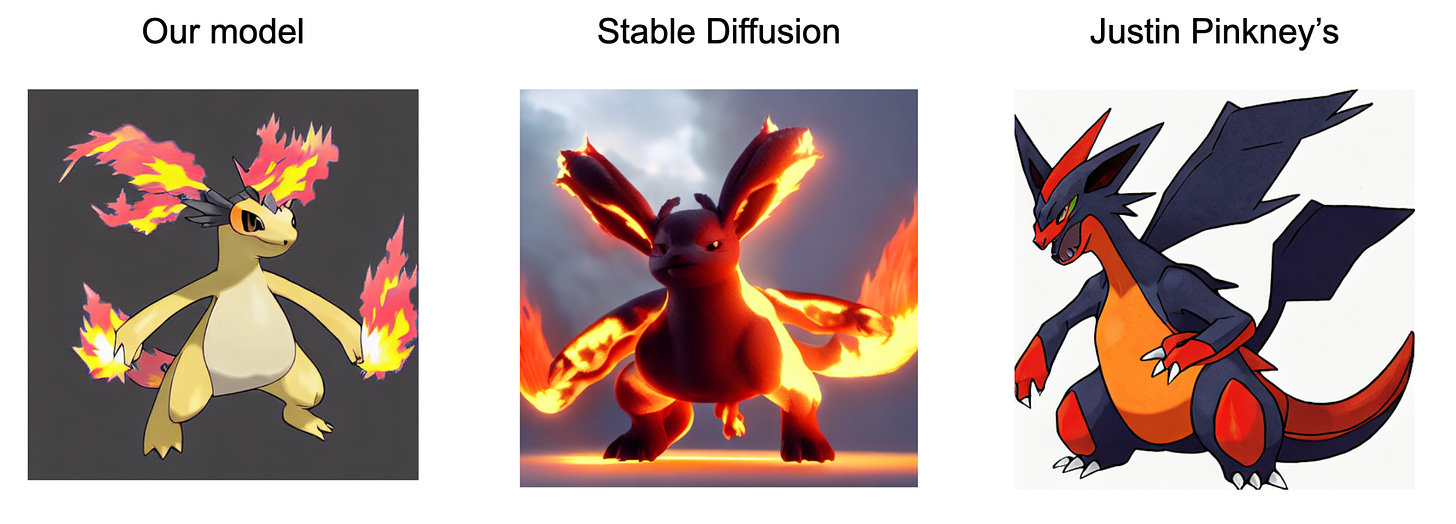

On the opposite side of the spectrum, a prompt could include various words that are irrelevant as far as the model is concerned. For example, consider a prompt with many stylistic cues to create a picture of a 3D object, such as “a fire type pokemon, highly detailed, 4k, hyper realistic, dramatic lighting, unreal engine, octane render”. The words “fire” and “pokemon” are known to the finetuned model, but the rest specify a style that goes against what it was trained on. As a result, we see the gradual disappearance of the specified style as we train more:

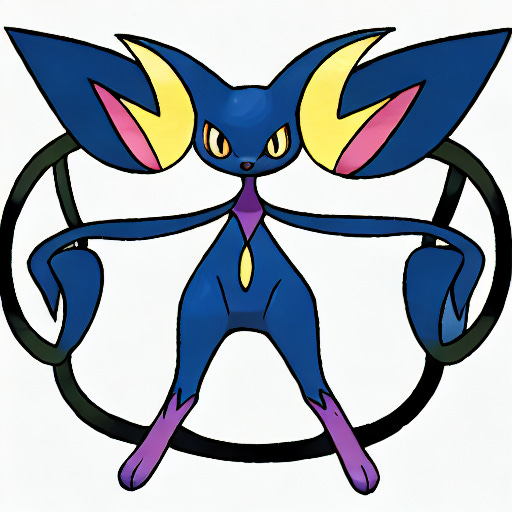

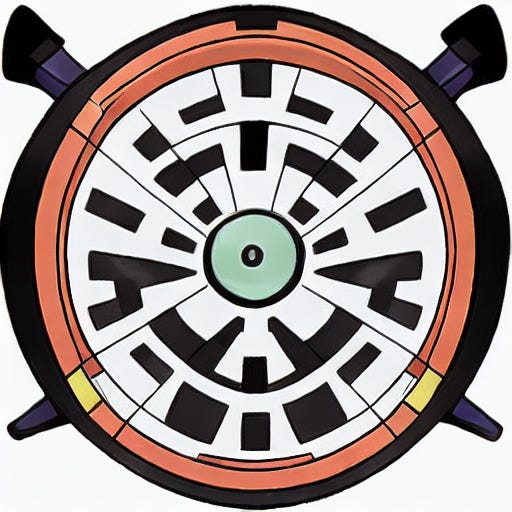

Let’s end with what happens when you ask a finetuned model for things totally unrelated to its training set. After all, much of the fun with text-to-image models comes from combining things that don’t usually go together. What do we get when we ask the Tonalamatl Diffusion model to generate “a pokemon”?

Exactly what you’d expect: weird Mesoamerican Pokémon from an alternate universe in which the Aztecs won.

Thanks to Justin Pinkney for reading a draft of this post, and to V. for making the post at all possible.